Starting today, you can fine-tune, evaluate, deploy, and continuously improve rerankers through the Datawizz Platform. This unlocks streamlined continuous learning for feeds, search, and RAG systems, letting you close the loop faster and translate real-world experience into model improvements. Docs here!

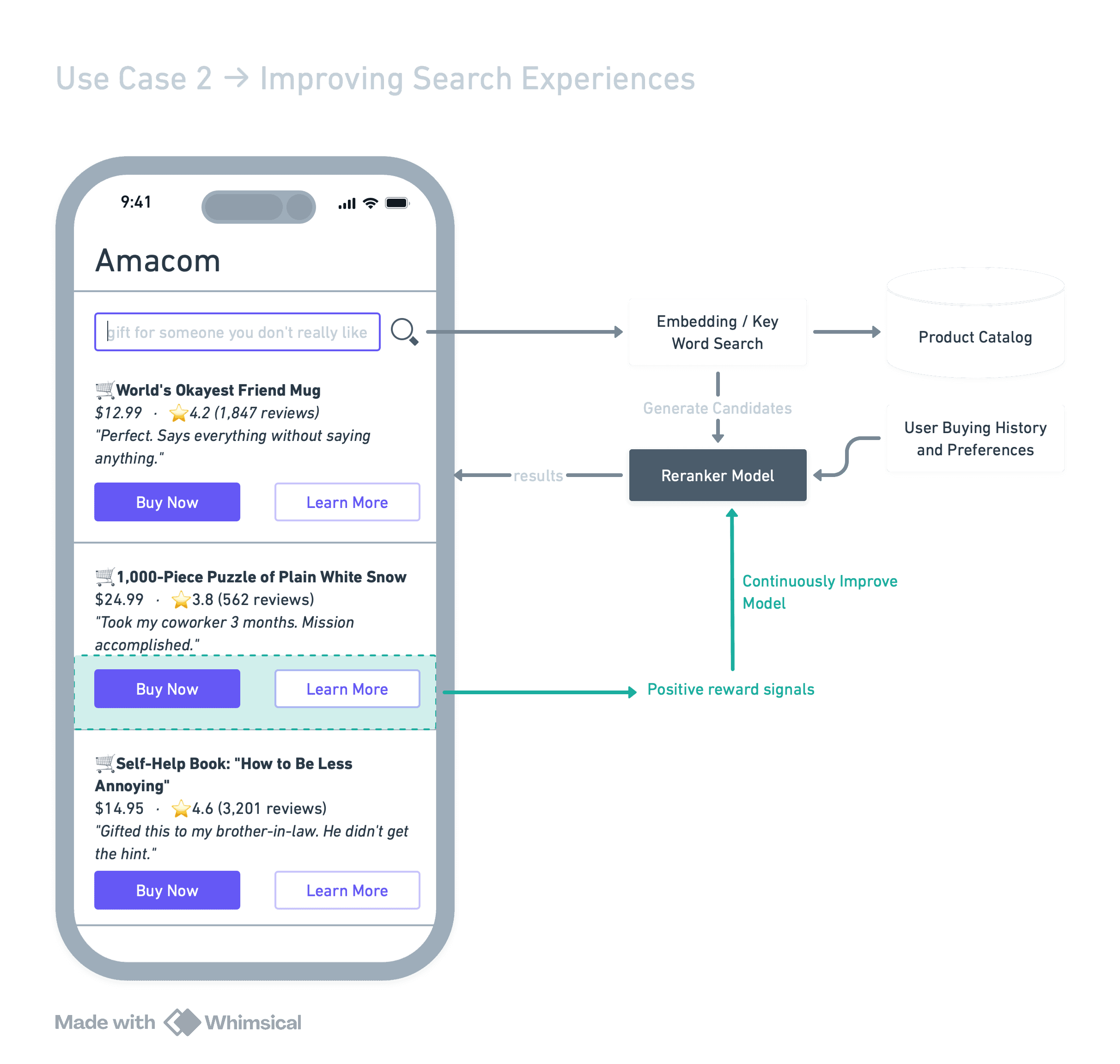

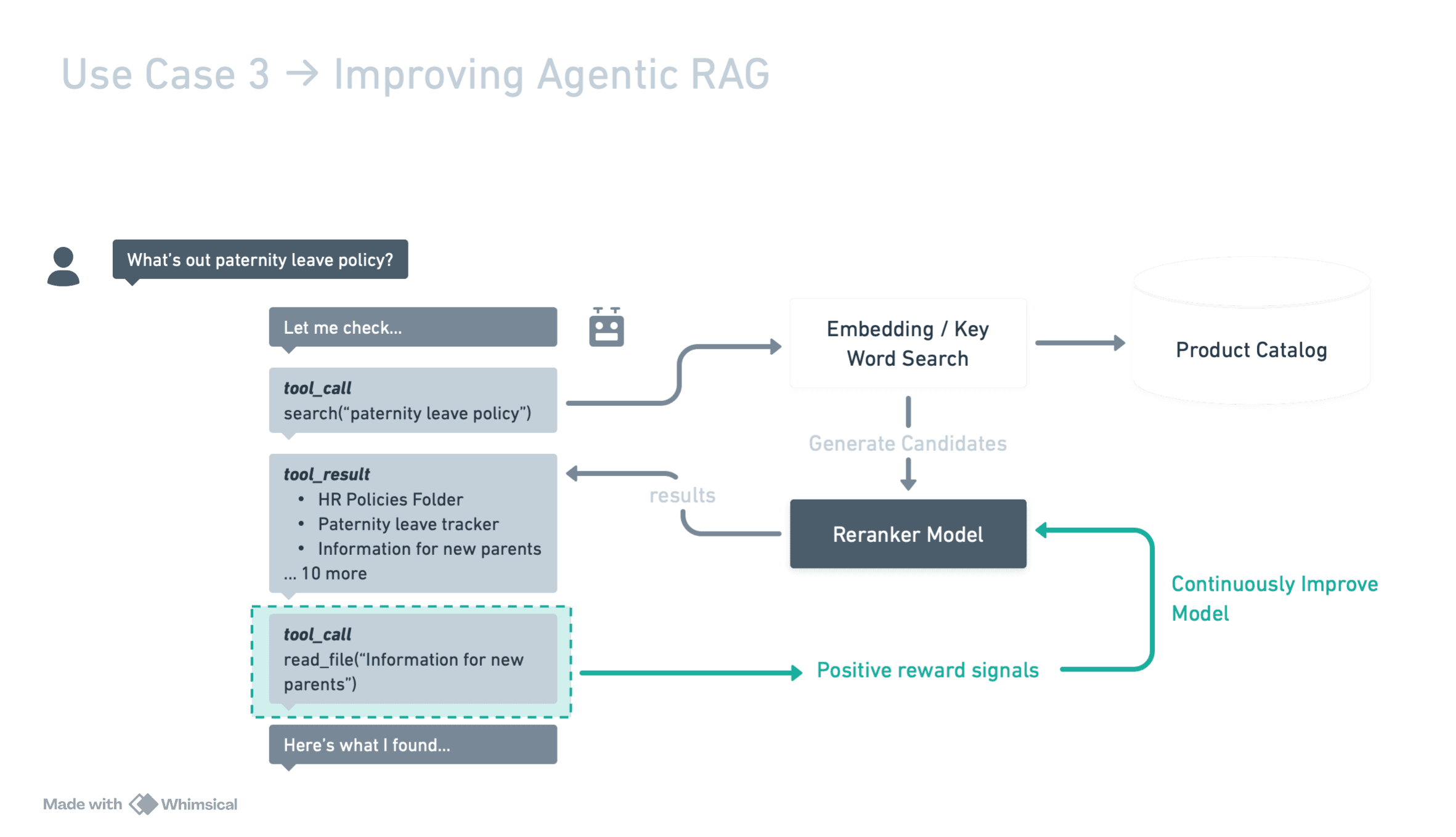

In the age of AI agents and RAG applications, finding the right thing is a critical challenge for humans and agents alike, and search and recommendation quality has a marked impact on application performance and agent efficacy.

In ecommerce, an Algolia/Digital Commerce 360 study showed a 2x improvement in conversion just by improving search performance.

In content feeds, a Netflix research paper showed a reduction in engagement of up to 16% when replacing their personalized recommender with a non-personalized one.

For agents, Databricks showed that adding a reranker improved recall@10 from 74% to 89% — a 15-point bump on their enterprise RAG benchmarks — while also reducing LLM hallucinations by 35%.

Reranker models sit at the core of this challenge, filtering and organizing information to help us get to the right thing faster. Every percentage point of accuracy they gain means higher user conversion, less agent context rot, and more time spent on the things that matter.

Customizing and fine-tuning these reranker models can markedly improve their results. Leading companies have already demonstrated that specialized fine-tuned rerankers can significantly outperform their generic peers. Fin.ai, for example, achieved a significant increase in case resolution rates in their production environment by launching a custom reranker model (read here).

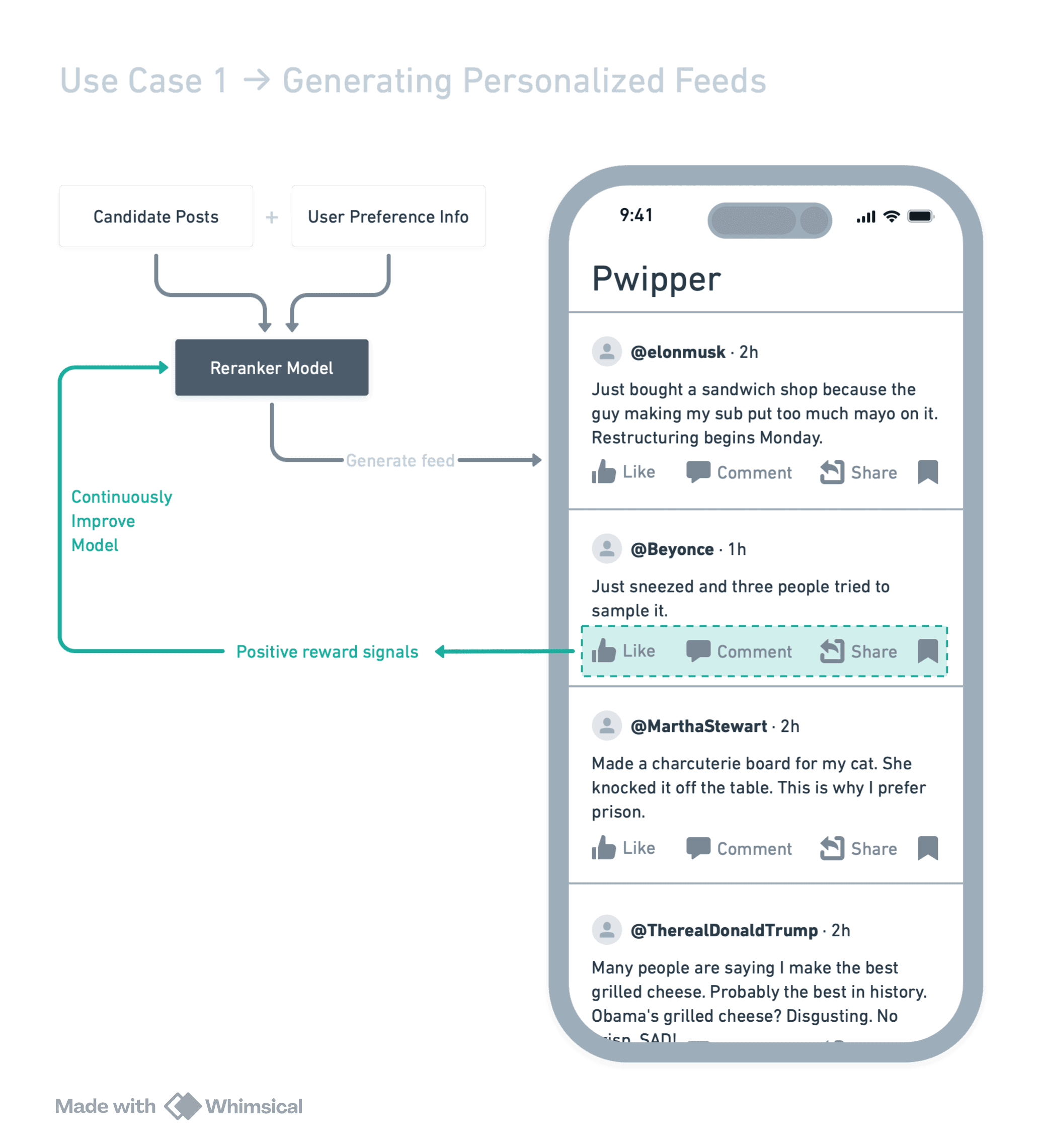

Rerankers are also ripe for continuous learning: leveraging runtime experience to continuously improve and evolve the model. Every time you generate results and present them to the user, you get immediate feedback signals. What did the user click? Which posts did the user engage with? Which files did the agent actually open?

With Datawizz, you can easily collect these signals and translate them into instant model improvements. We recently launched continuous learning to help companies close the loop, and today you can continuously train reranker models on Datawizz.

Training rerankers with Datawizz

Getting started with reranker fine-tuning on Datawizz takes just a few steps.

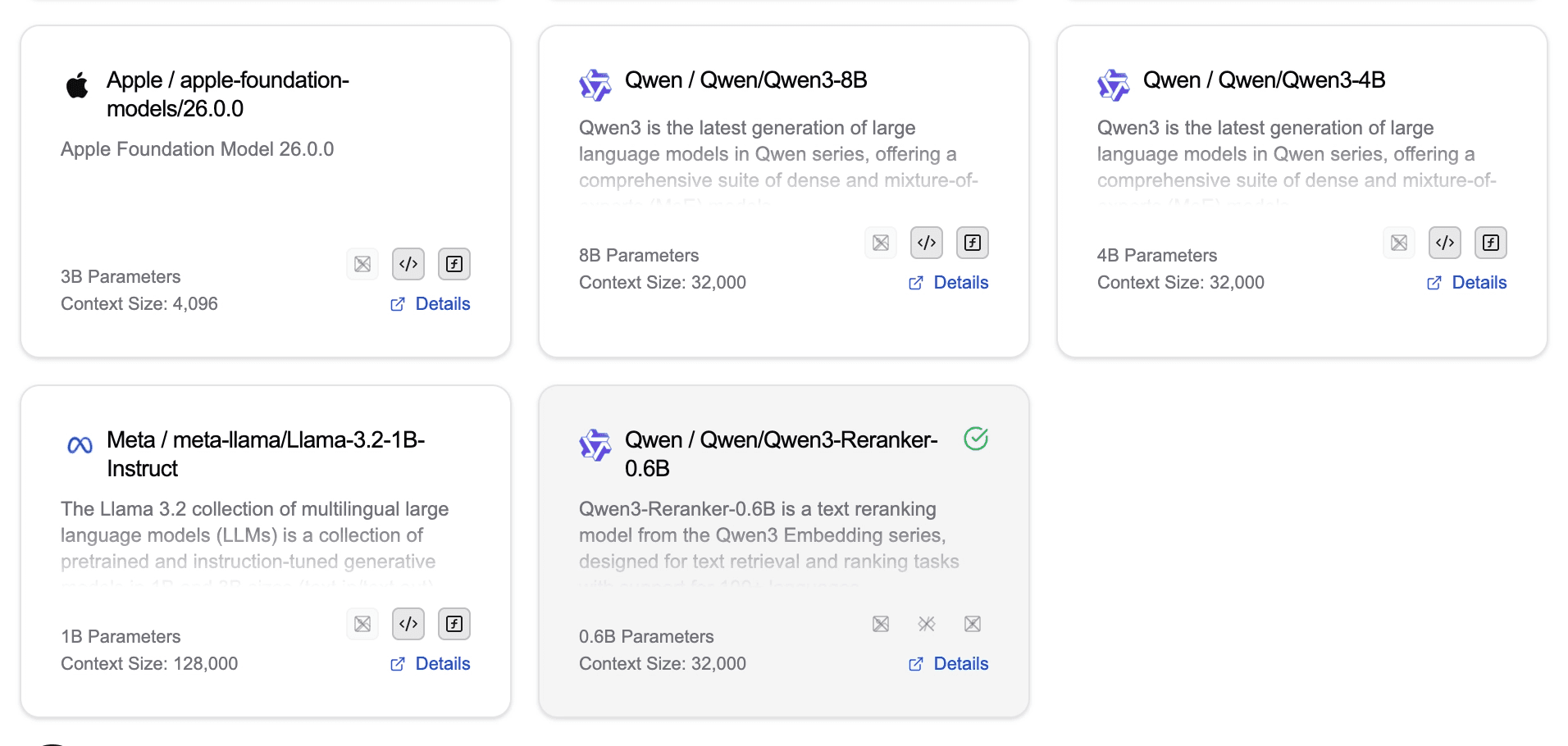

First, navigate to your project and select Models. Choose a reranker as your base model — we currently support Qwen/Qwen3-Reranker-0.6B, with more models coming soon.

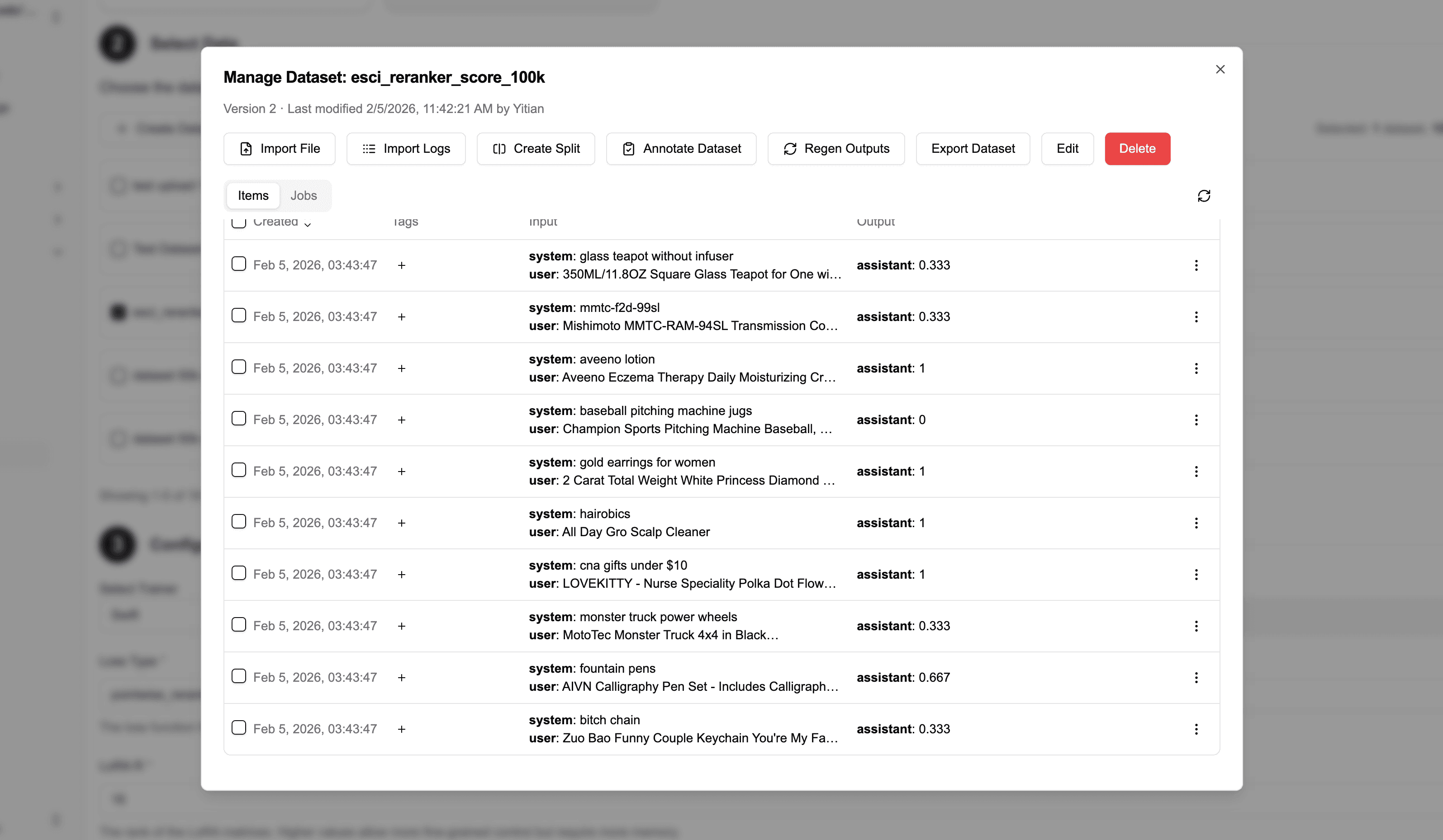

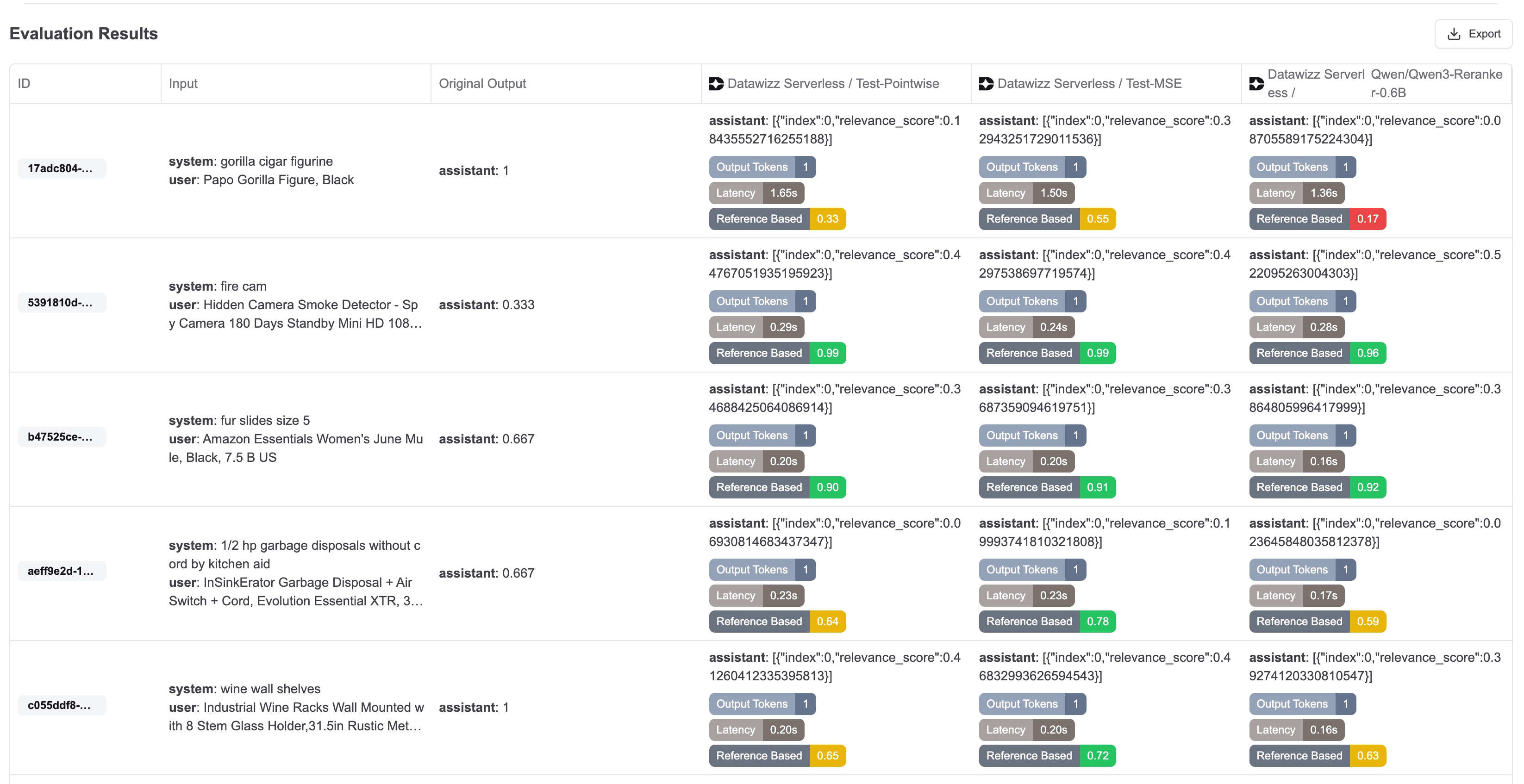

Next, select the training dataset you'd like to use. In this example, we're using ESCI (Amazon's Shopping Queries Dataset) — a well-known benchmark for search relevance that contains real-world query-product pairs with graded relevance labels, making it ideal for training and evaluating rerankers.

Your dataset can have binary outputs (Yes and No) or numeric values in [0.0, 1.0].
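
As a rough illustration of the two label formats, the sketch below converts ESCI-style graded labels (Exact, Substitute, Complement, Irrelevant) into either binary Yes/No outputs or numeric scores in [0.0, 1.0]. The score mapping and the record fields (`query`, `document`, `output`) are assumptions made for this example, not the Datawizz dataset schema, so check the docs for the exact format.

```python
# Illustrative only: the score mapping and record fields are assumptions,
# not the Datawizz dataset schema.

# ESCI grades: Exact, Substitute, Complement, Irrelevant.
ESCI_TO_SCORE = {"E": 1.0, "S": 0.5, "C": 0.25, "I": 0.0}  # hypothetical mapping


def to_numeric(esci_label: str) -> float:
    """Map an ESCI grade to a relevance score in [0.0, 1.0]."""
    return ESCI_TO_SCORE[esci_label]


def to_binary(esci_label: str) -> str:
    """Collapse an ESCI grade to Yes/No, treating only Exact as relevant."""
    return "Yes" if esci_label == "E" else "No"


def build_record(query: str, document: str, esci_label: str, binary: bool) -> dict:
    """Build one training example with either a binary or a numeric output."""
    output = to_binary(esci_label) if binary else to_numeric(esci_label)
    return {"query": query, "document": document, "output": output}


if __name__ == "__main__":
    print(build_record("usb-c cable", "Braided USB-C to USB-C cable, 2 m", "E", binary=True))
    print(build_record("usb-c cable", "USB-A to Micro-USB cable, 1 m", "S", binary=False))
```

Whether Substitute or Complement items should count as relevant depends on your product; the cutoff used here is only one possible choice.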

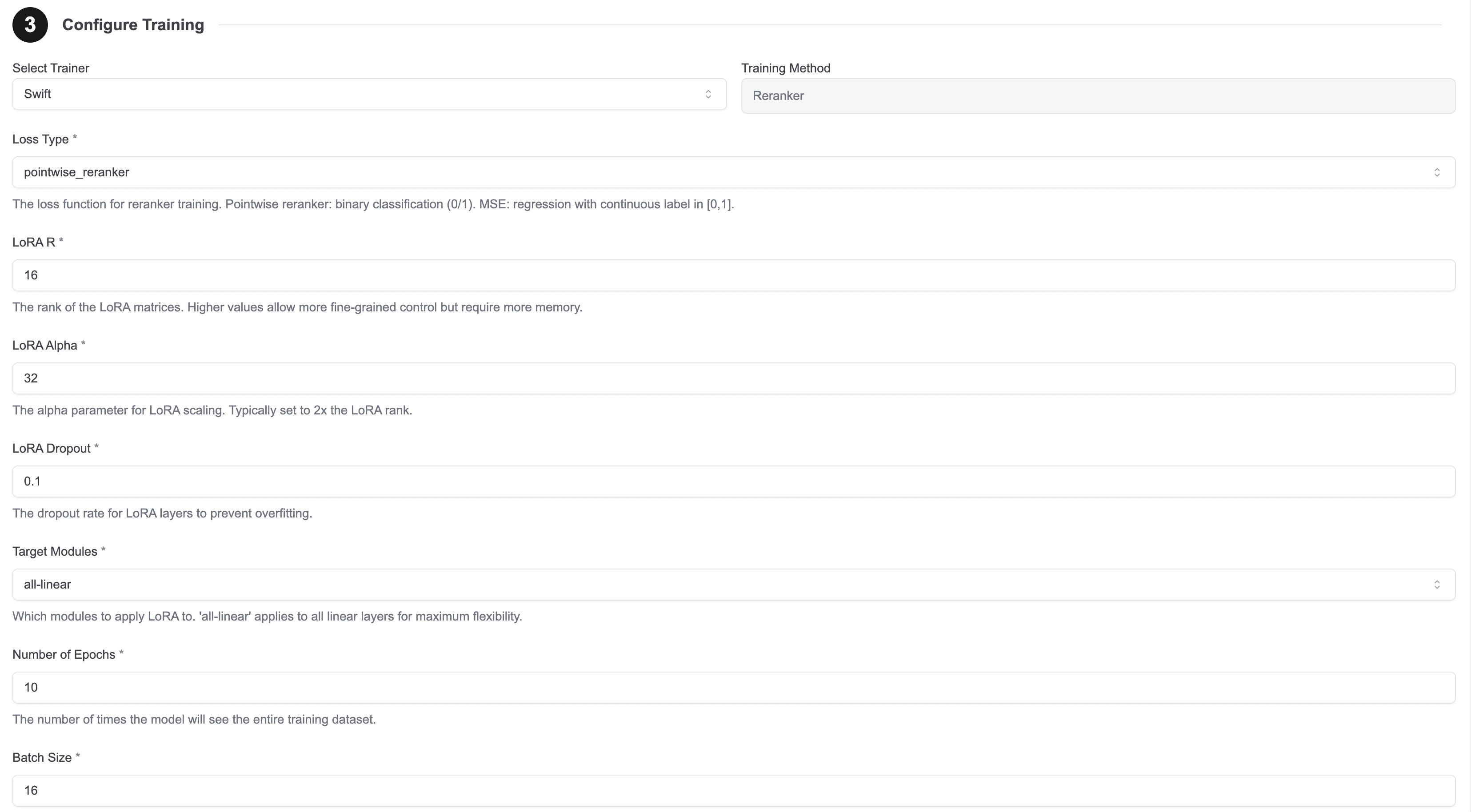

Datawizz handles reranker training through our ms-swift based trainer. You'll find all the standard fine-tuning parameters you'd expect — LoRA rank, alpha, learning rate, epochs, and more — pre-configured with sensible defaults so you can start training immediately or customize as needed.

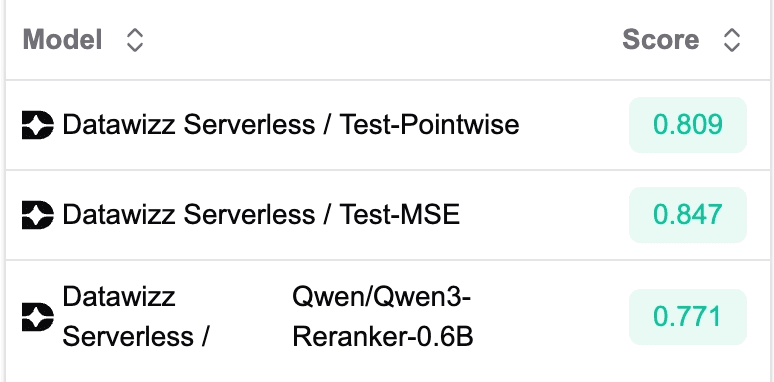

Datawizz currently supports two loss functions for reranker training:

MSE: For continuous relevance scores (e.g., click-through rates, 0.0–1.0 scores)

Pointwise: For categorical relevance labels (e.g., relevant/irrelevant, yes/no…)

Choose based on your label format — continuous scores → MSE, discrete categories → Pointwise.
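
To make the distinction concrete, here is a minimal pure-Python sketch of the two objectives. It assumes the pointwise loss is binary cross-entropy on the model's predicted probability of "yes", which is a common formulation; the exact loss inside the Datawizz trainer may differ. All function names are illustrative.

```python
from __future__ import annotations

import math


def mse_loss(predicted: list[float], target: list[float]) -> float:
    """Mean squared error between predicted and target relevance scores."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


def pointwise_loss(prob_yes: list[float], labels: list[str]) -> float:
    """Binary cross-entropy, assuming 'yes' is the positive class."""
    eps = 1e-12  # clamp probabilities to avoid log(0)
    total = 0.0
    for p, label in zip(prob_yes, labels):
        y = 1.0 if label.lower() == "yes" else 0.0
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(labels)


def pick_loss(labels: list) -> str:
    """Discrete string categories -> 'pointwise'; numeric scores -> 'mse'."""
    return "pointwise" if all(isinstance(x, str) for x in labels) else "mse"


if __name__ == "__main__":
    print(pick_loss(["yes", "no"]), pointwise_loss([0.9, 0.2], ["yes", "no"]))
    print(pick_loss([0.1, 0.8]), mse_loss([0.2, 0.7], [0.1, 0.8]))
```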

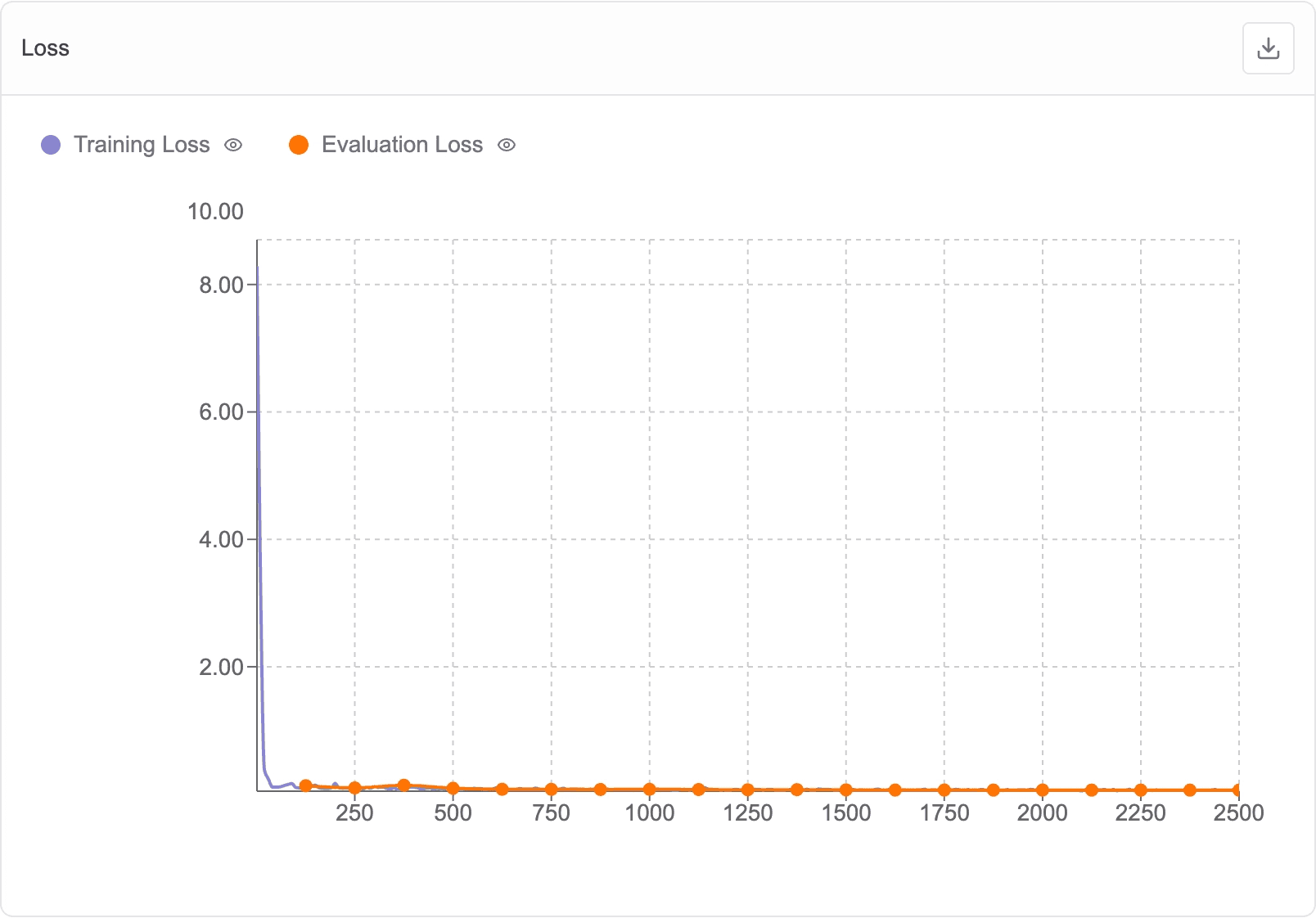

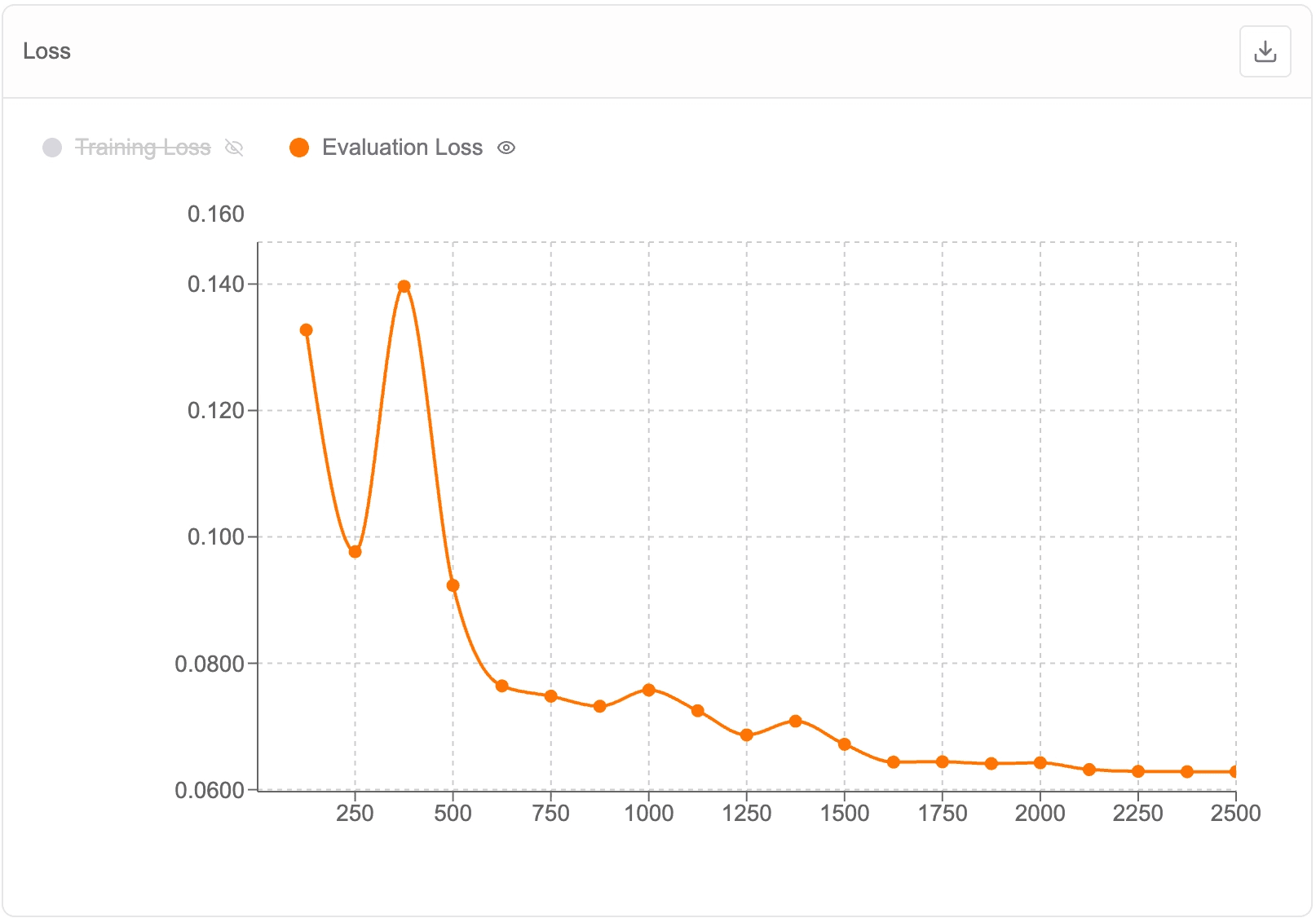

Once configured, hit Start Training and Datawizz takes care of the rest — distributed training, checkpointing, and evaluation all run automatically. When training completes, you can evaluate your model against held-out data and deploy it directly to a serving endpoint, all within the same platform.

We created an MSE evaluator under reference-based evaluations. It compares the model's predicted relevance scores against ground-truth labels, giving you a clear measure of how well your reranker has learned to score query-document pairs.

Continuous Learning for Rerankers

Datawizz makes it possible to capture user signals from your runtime and use them to retrain and improve your reranker model.

Whenever Datawizz generates a response, it sends back a unique response UID and a feedback URL. You can send signals back, either positive or negative, and they feed directly into the training pipeline.

In a reranker use case, these signals can be based on user interactions:

A click on a feed item is a positive feedback signal

A quick swipe away from a feed item is a negative one

An agent opening a RAG result is a positive signal

A user clicking / buying a search result is a positive signal
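
One way to turn these interactions into feedback is sketched below. The event names, the payload fields, and the idea of sending a JSON body to the feedback URL are all assumptions for illustration; the actual request format is defined in the Datawizz docs.

```python
import json

# Hypothetical event names mapped to the signal polarity described above.
EVENT_TO_SIGNAL = {
    "feed_click": "positive",
    "feed_quick_swipe": "negative",
    "agent_opened_result": "positive",
    "search_click": "positive",
    "search_purchase": "positive",
}


def build_feedback(response_uid: str, event: str) -> dict:
    """Build a feedback payload for one interaction (payload shape is assumed)."""
    if event not in EVENT_TO_SIGNAL:
        raise ValueError(f"unknown event: {event}")
    return {
        "response_uid": response_uid,
        "signal": EVENT_TO_SIGNAL[event],
        "event": event,
    }


if __name__ == "__main__":
    payload = build_feedback("resp-123", "feed_click")
    # In a real integration, this JSON body would be sent to the feedback URL
    # returned alongside the response UID.
    print(json.dumps(payload))
```

Keeping the event-to-signal mapping in one table makes it easy to revisit later, for example if a quick swipe turns out to be a weaker negative signal than expected.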

The diagrams below illustrate these workflows:

Ready to start improving your reranker with real-world feedback? Get started on Datawizz today or book a demo to see continuous learning in action.