Continuous Learning: Closing the Loop Between Runtime and Training

Most teams building specialized models follow a familiar pattern: collect data, fine-tune, evaluate, deploy, move on.

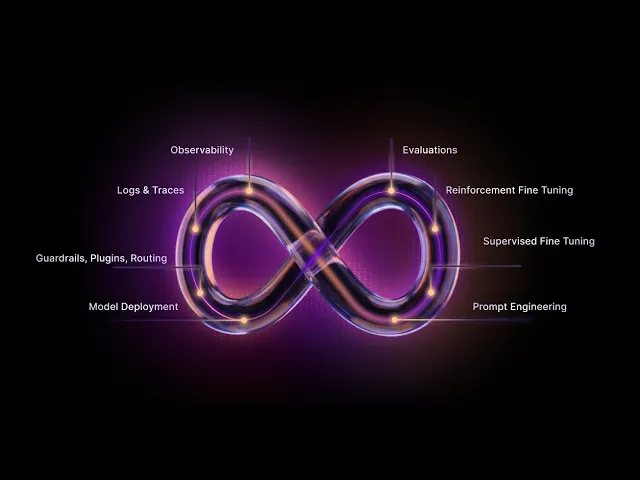

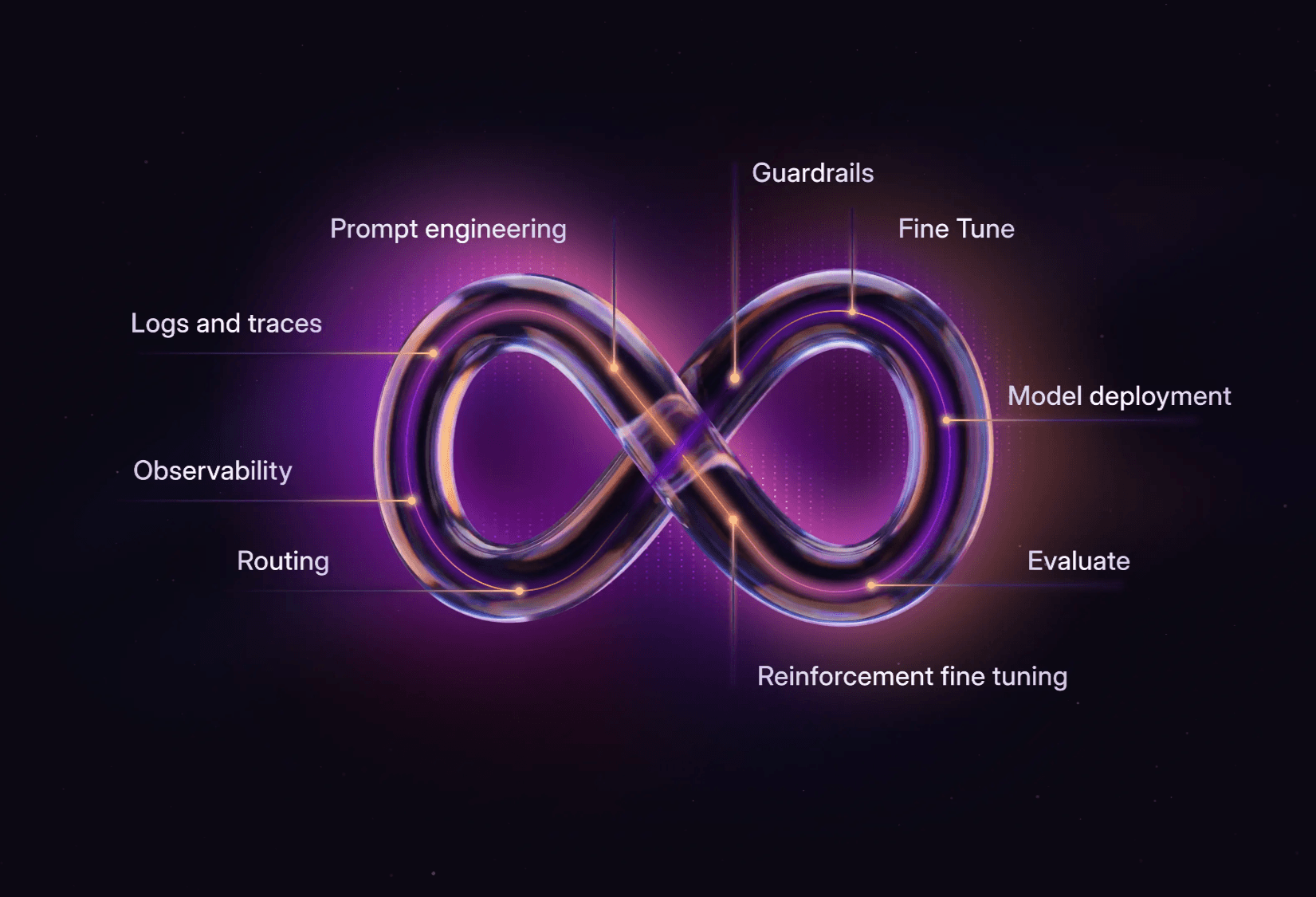

Once the model is in production, you bolt on the runtime stack. Observability to monitor performance. Logging and traces to debug issues. Guardrails to catch bad outputs. Maybe routing to manage multiple models.

Then three months later, a better base model drops, the use case evolves, or you're sitting on ten times the production data you started with. So you rebuild the pipeline from scratch.

The work doesn't compound. Each iteration is episodic, disconnected from the last.

That's why we built Continuous Learning in Datawizz.

The problem

Training and runtime live in separate worlds. Your training infrastructure is in one place, your inference stack in another. The runtime generates signal, and the training side ignores it.

This separation creates specific friction points:

Production signals don't flow back to training. Requests, traces, and outcomes get logged somewhere, but rarely in a format useful for fine-tuning. The data exists; it's just not connected.

Failures become tickets, not training signal. When your model gets something wrong, you open a bug, maybe add a test case, and hope someone remembers to include it in the next training run.

Evaluation runs on stale distributions. Your test sets represent what the world looked like when you built them, not what's hitting your endpoints today.

Fine-tuning is calendar-driven. You retrain because it's been a quarter, not because the model actually needs it.

This is what happens when training and serving are architecturally separate. Nothing compounds.

How Continuous Learning works

Continuous Learning bridges the two. It captures production signals, normalizes them into training-ready data, and gates what flows into your next training run.

The pipeline

Capture production signals. Prompts, outputs, tool calls, traces, and downstream outcomes from your existing app events, tracing infrastructure, and review tools.

Normalize into a consistent schema. Raw logs become training-ready data: inputs, outputs, metadata, feedback signals, and which model produced what.
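To make the normalization step concrete, here is a minimal Python sketch. The `TrainingRecord` fields and the raw event shape it reads are hypothetical. They are not Datawizz's actual schema. The point is that every source is mapped onto one record type that carries input, output, feedback, metadata, and the producing model.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

@dataclass
class TrainingRecord:
    """Hypothetical normalized schema; the field names are illustrative."""
    record_id: str
    model_id: str                    # which model produced the output
    input_text: str
    output_text: str
    feedback: Optional[str] = None   # e.g. "edited", "accepted", "reopened"
    metadata: dict[str, Any] = field(default_factory=dict)

# Keys the normalizer maps explicitly; everything else is kept as metadata.
_KNOWN_KEYS = {"id", "model", "request", "response", "feedback"}

def normalize(raw: dict[str, Any]) -> TrainingRecord:
    """Map one raw log event onto the shared schema.

    Assumes a hypothetical raw shape:
    {"id": ..., "model": ..., "request": {"prompt": ...},
     "response": {"text": ...}, "feedback": ..., <extra keys>}
    """
    return TrainingRecord(
        record_id=str(raw["id"]),
        model_id=raw.get("model", "unknown"),
        input_text=raw["request"]["prompt"],
        output_text=raw["response"]["text"],
        feedback=raw.get("feedback"),
        metadata={k: v for k, v in raw.items() if k not in _KNOWN_KEYS},
    )
```

In practice each source (app events, traces, review tools) would get its own small adapter like `normalize`, so everything downstream sees a single shape.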

Select high-value candidates. Surface what matters: repeated failures, low-confidence outputs, human overrides, distribution drift.
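Candidate selection can be expressed as a set of simple rules over normalized records. The sketch below assumes hypothetical `intent`, `confidence`, `failed`, and `human_override` fields. It flags low-confidence outputs, human overrides, and failures that repeat within the same intent. Distribution drift is left out because it requires comparing distributions rather than applying a per-record rule.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Record:
    # Hypothetical fields; adapt to your own schema.
    record_id: str
    intent: str           # coarse label used to group similar requests
    confidence: float     # model-reported or estimated confidence in [0, 1]
    failed: bool          # downstream outcome marked as a failure
    human_override: bool  # a reviewer or user replaced the output

def select_candidates(records: list[Record], low_conf: float = 0.5,
                      min_repeat_failures: int = 2) -> list[tuple[str, list[str]]]:
    """Return (record_id, reasons) for every record worth training on."""
    # Count failures per intent so one-off failures can be told apart from patterns.
    failures_by_intent = Counter(r.intent for r in records if r.failed)
    selected = []
    for r in records:
        reasons = []
        if r.confidence < low_conf:
            reasons.append("low_confidence")
        if r.human_override:
            reasons.append("human_override")
        if r.failed and failures_by_intent[r.intent] >= min_repeat_failures:
            reasons.append("repeated_failure")
        if reasons:
            selected.append((r.record_id, reasons))
    return selected
```

Keeping the reasons next to each ID makes it easy to audit why a given example ended up in a training set.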

Convert outcomes into training signals. Labels for SFT. Preference pairs when users choose one output over another. Reward signals when downstream tasks succeed or fail.
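Here is a minimal sketch of the three conversions. The dict layouts loosely follow the prompt/completion and prompt/chosen/rejected formats that common fine-tuning and preference-optimization tooling accepts. The exact keys, and the +1/-1 reward scale, are assumptions made for illustration.

```python
from typing import Optional

def to_sft_example(prompt: str, approved_output: str) -> dict:
    """A human-approved or corrected output becomes a supervised label."""
    return {"prompt": prompt, "completion": approved_output}

def to_preference_pair(prompt: str, shown: str, chosen: str) -> Optional[dict]:
    """If the user chose or edited toward a different output, prefer it over the one shown."""
    if chosen == shown:
        return None  # the user kept the output as-is, so no preference was expressed
    return {"prompt": prompt, "chosen": chosen, "rejected": shown}

def to_reward(prompt: str, output: str, task_succeeded: bool) -> dict:
    """Downstream success or failure becomes a scalar reward (+1 / -1 in this sketch)."""
    return {"prompt": prompt, "completion": output,
            "reward": 1.0 if task_succeeded else -1.0}
```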

Train with full traceability. Run SFT, preference optimization, or RL-style updates. Every artifact is versioned and tied back to the signals that produced it.
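One way to tie an artifact back to its inputs is to store a lineage record with every run. The record holds the base model, the method, the contributing signal IDs, and a content hash of the exact training data. The `TrainingRun` type and the `signal_id` key below are hypothetical. The hash is a plain SHA-256 over canonical JSON.

```python
import hashlib
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingRun:
    """Hypothetical lineage record linking a run to its inputs."""
    base_model: str
    method: str                  # e.g. "sft", "dpo", "rl"
    signal_ids: tuple[str, ...]  # production signals that fed this run
    dataset_hash: str            # fingerprint of the exact training data

def fingerprint(examples: list[dict]) -> str:
    """SHA-256 over canonical JSON rows; independent of key order and list order."""
    rows = sorted(json.dumps(e, sort_keys=True, separators=(",", ":")) for e in examples)
    return hashlib.sha256("\n".join(rows).encode("utf-8")).hexdigest()

def record_run(base_model: str, method: str, examples: list[dict]) -> TrainingRun:
    """Tie a training run back to the signals and data that produced it."""
    signal_ids = tuple(sorted(e["signal_id"] for e in examples))
    return TrainingRun(base_model, method, signal_ids, fingerprint(examples))
```

Because the fingerprint ignores ordering, two runs on the same examples get the same hash, and any change to the data produces a different one.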

Gate releases on real distributions. Evaluate against your offline suite plus production-representative slices, then roll out gradually.
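A release gate can be as simple as "no slice gets meaningfully worse". This sketch assumes that eval results arrive as hypothetical slice-name-to-score dicts, where higher is better. It blocks the release if any slice regresses by more than a tolerance or is missing from the candidate's results.

```python
def release_gate(baseline: dict[str, float], candidate: dict[str, float],
                 max_regression: float = 0.01) -> tuple[bool, list[str]]:
    """Pass only if no eval slice regresses by more than max_regression.

    Both dicts map slice name -> score (higher is better) and should cover
    the offline suite plus production-representative slices.
    """
    failures = []
    for slice_name, base_score in baseline.items():
        cand_score = candidate.get(slice_name)
        if cand_score is None:
            failures.append(f"{slice_name}: missing from candidate evals")
        elif cand_score < base_score - max_regression:
            failures.append(f"{slice_name}: {base_score:.3f} -> {cand_score:.3f}")
    return (not failures, failures)
```

Checking per slice, rather than using one aggregate score, means a gain on the slice you targeted cannot hide a regression elsewhere.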

Example: turning production outcomes into training

Consider a support agent model.

A user edits the suggested response before sending → preference signal (edited version preferred)

A ticket reopens within 24 hours → negative outcome (reward signal)

"Billing cancellation" traffic spikes after a policy update → distribution shift (slice to monitor)

Continuous Learning turns those events into a structured dataset, trains a targeted update, and gates the release against the "billing cancellation" slice plus your baseline evals, without rebuilding everything from scratch.
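For the support-agent example, the event-to-signal mapping might look like the following sketch. The ticket fields (`suggested_reply`, `sent_reply`, `closed_at`, `reopened_at`) are hypothetical. The 24-hour window comes from the example above. The spike check compares each topic's share of traffic across two time windows.

```python
from datetime import datetime, timedelta

REOPEN_WINDOW = timedelta(hours=24)

def signals_from_ticket(ticket: dict) -> list[dict]:
    """Turn one ticket's events into training signals (hypothetical ticket shape)."""
    signals = []
    suggested, sent = ticket["suggested_reply"], ticket["sent_reply"]
    if sent != suggested:
        # The agent edited the draft before sending: the edited version is preferred.
        signals.append({"type": "preference", "prompt": ticket["message"],
                        "chosen": sent, "rejected": suggested})
    reopened_at = ticket.get("reopened_at")
    if reopened_at is not None and reopened_at - ticket["closed_at"] <= REOPEN_WINDOW:
        # A reopen shortly after closing counts as a negative outcome.
        signals.append({"type": "reward", "prompt": ticket["message"],
                        "completion": sent, "reward": -1.0})
    return signals

def spiking_slices(before: dict[str, int], after: dict[str, int],
                   ratio: float = 2.0) -> list[str]:
    """Topics whose share of traffic grew at least `ratio`-fold between two windows.

    Assumes both windows contain at least one request.
    """
    total_before, total_after = sum(before.values()), sum(after.values())
    spikes = []
    for topic, n_after in after.items():
        share_before = before.get(topic, 0) / total_before
        share_after = n_after / total_after
        if share_after > 0 and share_after >= ratio * share_before:
            spikes.append(topic)
    return sorted(spikes)
```

A spiking topic such as "billing_cancellation" would then become an extra slice in the release evals.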

The hard parts

Continuous learning has well-known failure modes. A usable system has to handle them.

Noisy signals. Define quality gates before data enters the pipeline: confidence thresholds, human verification, outcome validation.
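A quality gate is a predicate that every signal must pass before it enters the pipeline. In this sketch, which uses hypothetical field names, a human-verified signal is always admitted. An unverified signal must have both a confident label and a validated outcome.

```python
from dataclasses import dataclass

@dataclass
class Signal:
    # Hypothetical fields for illustration.
    label_confidence: float   # how sure we are that the label or outcome is right
    human_verified: bool      # a reviewer confirmed it
    outcome_validated: bool   # the downstream outcome was checked, not inferred

def passes_quality_gate(s: Signal, min_confidence: float = 0.8) -> bool:
    """Admit a signal if a human verified it, or if it is both confident and validated."""
    if s.human_verified:
        return True
    return s.label_confidence >= min_confidence and s.outcome_validated
```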

Privacy and compliance. Not everything should become training data, and you need to both enforce and demonstrate that through redaction, sampling policies, and rules that exclude data before it reaches training.
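Here is a minimal sketch of the three controls: named exclusion rules, deterministic sampling, and redaction. Each decision is returned with a reason that can be logged for audit. The rule names, record fields, and regexes are hypothetical. Two regexes are nowhere near a complete PII detector.

```python
import hashlib
import re

# Illustrative patterns only; real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical exclusion rules: each returns True if the record must never be used.
EXCLUSION_RULES = {
    "opted_out": lambda r: r.get("customer_opted_out", False),
    "restricted_region": lambda r: r.get("region") == "restricted",
}

def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))

def sampled(record_id: str, rate: float) -> bool:
    """Deterministic sampling: the same record always gets the same decision."""
    bucket = int(hashlib.sha256(record_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return bucket < rate * 10_000

def admit(record: dict, sample_rate: float = 1.0):
    """Return (redacted copy or None, reason); the reason is what you log for audit."""
    for name, rule in EXCLUSION_RULES.items():
        if rule(record):
            return None, f"excluded:{name}"
    if not sampled(record["id"], sample_rate):
        return None, "excluded:sampling"
    return {**record, "text": redact(record["text"])}, "admitted"
```

Logging the returned reason for every record is what turns "we don't train on that data" into something you can show.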

Overfitting to recent traffic. Holdout sets and evaluation across different segments detect when you're optimizing for a pattern that won't last.
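One way to guard against chasing a short-lived pattern is to hold out the same fraction of every segment and then score each segment separately. This prevents a spike in one segment from crowding the others out of evaluation. The sketch below assumes that records are dicts and that the caller supplies hypothetical `key` and `is_correct` functions.

```python
import random
from collections import defaultdict

def stratified_holdout(records, key, holdout_frac=0.1, seed=0):
    """Hold out the same fraction of every segment (at least one record each)."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for r in records:
        by_segment[key(r)].append(r)
    train, holdout = [], []
    for segment in sorted(by_segment):
        items = by_segment[segment][:]
        rng.shuffle(items)
        n_hold = max(1, round(len(items) * holdout_frac))
        holdout.extend(items[:n_hold])
        train.extend(items[n_hold:])
    return train, holdout

def segment_scores(holdout, key, is_correct):
    """Accuracy per segment, so a gain on recent traffic can't mask losses elsewhere."""
    totals, hits = defaultdict(int), defaultdict(int)
    for r in holdout:
        totals[key(r)] += 1
        hits[key(r)] += int(is_correct(r))
    return {s: hits[s] / totals[s] for s in totals}
```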

Drift detection. Monitor for shifts between what you trained on and what's actually hitting production, and get alerted before it impacts performance.
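For categorical traffic such as intents or topics, a common drift measure is the Population Stability Index (PSI). PSI compares the training-time distribution with the production distribution. This sketch uses PSI as one reasonable choice, not necessarily the method Datawizz uses. The 0.25 alert threshold is a commonly cited rule of thumb, not a universal constant.

```python
import math

def psi(expected: dict[str, int], actual: dict[str, int], eps: float = 1e-6) -> float:
    """Population Stability Index between training-time and production category counts."""
    categories = set(expected) | set(actual)
    e_total, a_total = sum(expected.values()), sum(actual.values())
    score = 0.0
    for c in categories:
        # Floor each share at eps so categories missing from one side don't hit log(0).
        e = max(expected.get(c, 0) / e_total, eps)
        a = max(actual.get(c, 0) / a_total, eps)
        score += (a - e) * math.log(a / e)
    return score

def drift_alert(expected: dict[str, int], actual: dict[str, int],
                threshold: float = 0.25) -> bool:
    """Fire an alert when the distribution shift exceeds the threshold."""
    return psi(expected, actual) >= threshold
```

Each term is non-negative, so PSI is zero only when the two distributions match and grows as production traffic moves away from what the model was trained on.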

Regressions. Every model update is versioned, with staged rollouts and the ability to roll back.
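Here is a minimal sketch of a staged rollout with rollback. Traffic is split by hashing a stable key, so each user consistently sees the same model. The candidate advances one stage at a time while its metrics stay healthy. The stage fractions and the health signal are assumptions. "Rollback" here means routing all traffic back to the previous version, which stays deployed.

```python
import hashlib
from typing import Optional

# Hypothetical traffic fractions for each rollout stage.
STAGES = [0.01, 0.05, 0.25, 1.0]

def routes_to_candidate(request_key: str, fraction: float) -> bool:
    """Sticky split: the same key (e.g. a user id) always lands in the same bucket."""
    bucket = int(hashlib.sha256(request_key.encode("utf-8")).hexdigest(), 16) % 10_000
    return bucket < fraction * 10_000

def next_stage(stage: int, healthy: bool) -> Optional[int]:
    """Advance one stage if the candidate looks healthy; None signals a rollback."""
    if not healthy:
        return None
    return min(stage + 1, len(STAGES) - 1)
```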

Cost. Budget controls and incremental runs keep spend predictable. "Continuous" is configurable, not always-on.
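"Configurable, not always-on" can be expressed as an explicit retraining policy. This sketch uses hypothetical knobs and a made-up per-example cost. It triggers an incremental run on the new examples only when enough of them have accumulated, or when drift has been flagged, and only if the estimated cost fits the remaining budget.

```python
from dataclasses import dataclass

@dataclass
class RetrainPolicy:
    # Hypothetical knobs; the defaults are placeholders, not recommendations.
    min_new_examples: int = 500
    monthly_budget_usd: float = 1000.0
    cost_per_1k_examples_usd: float = 2.0

def should_retrain(policy: RetrainPolicy, new_examples: int,
                   spent_this_month_usd: float, drift_detected: bool) -> bool:
    """Decide whether to launch an incremental run on the new examples."""
    if new_examples == 0:
        return False
    # Don't train on a trickle unless drift says the model needs it.
    if new_examples < policy.min_new_examples and not drift_detected:
        return False
    # An incremental run costs only the new data, not a full retrain.
    estimated = new_examples / 1000 * policy.cost_per_1k_examples_usd
    return spent_this_month_usd + estimated <= policy.monthly_budget_usd
```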

The goal isn't to retrain more often. It's to make retraining evidence-driven and low-friction.

What this enables

When a new base model drops, you're not starting from scratch. You have a stream of versioned, production-derived signals (preferences, outcomes, high-value slices) that can be applied to the new model. The transfer isn't automatic, since different architectures may require adjustment, but your data assets carry forward.

When your use case evolves, the same feedback infrastructure that was improving the model yesterday continues working on the new distribution today.

Your model improves as a function of usage, not calendar time.

If you want to see what this looks like on a real workflow, get started or check out our documentation. We're happy to walk through an end-to-end pipeline on your use case.